Neural Style Transfer


Yesterday, I started experimenting with neural style transfer, since I was fascinated by the idea. I also looked into Google DeepDream, which is a different algorithm. For style transfer, I started from the Medium post by a Google intern on the TensorFlow projects, which is essentially a tutorial that walks through the implementation. I also read the original article by the authors (A Neural Algorithm of Artistic Style, Gatys, Ecker, Bethge).

The key points of the algorithm

The authors state that a neural network learns both high- and low-level information about the structure of an image. Lower-level representations correspond to style, while higher-level features correspond to content.

When we feed an image to a pre-trained neural network, some of its layers contain this information. The goal is to reconstruct the content of one image while making its style similar to that of a style image we provide, and both goals can be expressed in a single, neat loss function.

The math behind it all

Using a pre-trained neural network, we can generate an image with the intended style. We start with the content image \(\vec{c}\) and the style image \(\vec{a}\), and we want to put the style of the style image on top of the content image.

We also need to select style layers and content layers: lower-level representations should be used for the style layers, and higher-level ones for the content layers.

In my setup I used a pre-trained VGG19 model that was suggested in the original article and used the layers block1_conv1, block2_conv1, block3_conv1, block4_conv1 for style and block5_conv2 for content reconstruction.

We want to make \(\vec{c}\) similar to the style image in style. The algorithm does not change the model weights; instead, it takes the content image as the initial image and updates this image in each iteration with the gradients of the loss.
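To make the idea of optimizing the input (rather than the weights) concrete, here is a minimal NumPy sketch. It assumes a toy, frozen "network" made of a single random linear map W, a hypothetical stand-in for VGG19, and a plain squared-error loss on its features. Gradient descent updates only the image vector; W is never modified.

```python
import numpy as np

# Hypothetical stand-in for a frozen pre-trained network: a fixed random
# linear feature map. The real setup uses VGG19 activations instead.
rng = np.random.default_rng(0)
W = rng.normal(size=(8, 16)) / 4.0


def features(x):
    return W @ x


def loss_and_grad(x, target_feats):
    """0.5 * ||features(x) - target||^2 and its gradient w.r.t. the input x."""
    diff = features(x) - target_feats
    return 0.5 * float(diff @ diff), W.T @ diff


def optimize_input(x0, target_feats, lr=0.2, steps=1000):
    x = x0.copy()  # the "image" being optimized
    for _ in range(steps):
        _, grad = loss_and_grad(x, target_feats)
        x -= lr * grad  # gradient step on the input; W stays untouched
    return x


initial_image = rng.normal(size=16)
target = features(rng.normal(size=16))  # features the image should match
W_before = W.copy()
result = optimize_input(initial_image, target)
print(loss_and_grad(initial_image, target)[0], loss_and_grad(result, target)[0])
```

The loss drops sharply while W is identical before and after, which is exactly the "optimize the image, freeze the model" pattern; in the real algorithm the loss is the content plus style loss defined below.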

The loss includes both a content term and a style term. The content loss is simple: we take the current image (the initial image, updated in each round), run it through the model and measure how far its content features are from the content features of the original content image \(\vec{c}\). In the equation below, \(\vec{r}\) denotes the reconstructed image, which we update with the gradients at each iteration:

\[L_{content}(\vec{c}, \vec{r}, l) = \frac{1}{2}\sum_{i,j}\Big( C^{l}_{ij} - R^{l}_{ij} \Big)^{2}\]

where \(C^{l}\) and \(R^{l}\) are the features extracted from layer \(l\) after \(\vec{c}\) and \(\vec{r}\) are fed to the model. The loss is calculated for each content layer, and in the total loss every content layer gets the same weight, with the weights adding up to 1.
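A minimal NumPy sketch of the per-layer content loss and the equal per-layer weighting. It assumes the feature maps have already been extracted from the model (for example from block5_conv2) as arrays of equal shape; the function names and dictionary keys are illustrative, not taken from my implementation.

```python
import numpy as np


def content_loss(C, R):
    """Per-layer content loss: 0.5 * sum_ij (C_ij - R_ij)^2.

    C and R are the feature maps of the content image and the reconstructed
    image from the same layer, so they must have identical shapes.
    """
    C = np.asarray(C, dtype=float)
    R = np.asarray(R, dtype=float)
    return 0.5 * np.sum((C - R) ** 2)


def weighted_content_loss(content_feats, recon_feats):
    """Combine per-layer losses with equal weights that add up to 1."""
    layers = list(content_feats)
    weight = 1.0 / len(layers)
    return sum(weight * content_loss(content_feats[l], recon_feats[l])
               for l in layers)


C = np.array([[1.0, 2.0], [3.0, 4.0]])
R = np.array([[1.0, 0.0], [3.0, 5.0]])
print(content_loss(C, R))  # 0.5 * (0 + 4 + 0 + 1) = 2.5
```

With a single content layer, as in my setup, the weight is simply 1.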

The style loss is a bit more sophisticated, as it is defined on the Gram matrices of the style features of the reconstructed image and of the style image.

The Gram matrix of the feature maps \(F^{l}\) in layer \(l\) is defined as:

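\[G^{l}_{ij} = \sum_{k}F^{l}_{ik}F^{l}_{jk}\]

where \(F^{l}_{ik}\) is the activation of the \(i\)-th filter at position \(k\) in layer \(l\). The Gram matrix is square, with one row and one column per filter, so its number of entries is the number of filters squared, since we take the inner product of the flattened feature maps of every pair of filters. For the loss we need to define: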

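\[E_{l} = \frac{1}{4N_l^{2}M^{2}_{l}}\sum_{i,j}\Big( G_{ij}^{l} - A_{ij}^{l} \Big)^{2}\]

where \(G^{l}\) and \(A^{l}\) are the Gram matrices of the style features of the reconstructed image \(\vec{r}\) and of the artistic style image \(\vec{a}\) in layer \(l\), \(N_l\) is the number of filters in layer \(l\), and \(M_l\) is the size (height times width) of each of its feature maps. The style loss is \(E_{l}\) summed over all style layers, with equal weights \(w_l\) that add up to 1.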
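The Gram matrix and the per-layer style term \(E_l\) can be sketched in NumPy as follows. The sketch assumes channels-last feature maps of shape (height, width, N_l) with the batch dimension already removed, which matches the default Keras layout, so that M_l = height × width. The function names are hypothetical.

```python
import numpy as np


def gram_matrix(feature_map):
    """G^l = F F^T, where F is N_l x M_l (one flattened feature map per row)."""
    h, w, n = feature_map.shape
    F = feature_map.reshape(h * w, n).T  # (N_l, M_l)
    return F @ F.T                        # (N_l, N_l)


def style_layer_loss(recon_map, style_map):
    """E_l = 1 / (4 N_l^2 M_l^2) * sum_ij (G_ij - A_ij)^2 for one layer."""
    h, w, n = recon_map.shape
    m = h * w  # M_l: number of positions in each feature map
    G = gram_matrix(recon_map)  # Gram matrix of the reconstructed image
    A = gram_matrix(style_map)  # Gram matrix of the style image
    return np.sum((G - A) ** 2) / (4.0 * n**2 * m**2)


fmap = np.arange(2 * 3 * 4, dtype=float).reshape(2, 3, 4)  # 2x3 map, 4 filters
print(gram_matrix(fmap).shape)  # (4, 4)
print(style_layer_loss(np.ones((1, 1, 1)), np.zeros((1, 1, 1))))  # 0.25
```

Because the Gram matrix sums over all positions, it discards where a feature occurs and keeps only which filters fire together, which is why it captures style rather than layout.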

\[L_{style}(\vec{a}, \vec{r}) = \sum_{l \in \text{style layers}} w_l E_l\]

The total loss combines the two terms, and their weights must be chosen carefully to produce good-looking style-transferred images:

\[L_{total}(\vec{c}, \vec{a}, \vec{r}) = \alpha L_{content}(\vec{c}, \vec{r}) + \beta L_{style}(\vec{a}, \vec{r})\]

where the ratio \(\alpha/\beta\) should be around \(10^{-5}\).
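Putting the pieces together, the total loss can be sketched as below. The layer lists follow the setup described above; the NumPy helpers repeat the per-layer losses so that the block runs on its own. The defaults alpha=1.0 and beta=1e5 (giving α/β = 10^-5) are an illustrative assumption, not the tuned hyperparameters from the article. In the actual TensorFlow implementation the feature dictionaries would come from VGG19, and the gradient with respect to the reconstructed image would typically be obtained by automatic differentiation.

```python
import numpy as np

# Layer names as in the setup described above (Keras VGG19 naming).
STYLE_LAYERS = ["block1_conv1", "block2_conv1", "block3_conv1", "block4_conv1"]
CONTENT_LAYERS = ["block5_conv2"]


def gram_matrix(fmap):
    h, w, n = fmap.shape
    F = fmap.reshape(h * w, n).T  # (N_l, M_l)
    return F @ F.T


def style_layer_loss(r_map, a_map):
    h, w, n = r_map.shape
    diff = gram_matrix(r_map) - gram_matrix(a_map)
    return np.sum(diff ** 2) / (4.0 * n**2 * (h * w) ** 2)


def content_layer_loss(c_map, r_map):
    return 0.5 * np.sum((c_map - r_map) ** 2)


def total_loss(c_feats, a_feats, r_feats, alpha=1.0, beta=1e5):
    """alpha * L_content + beta * L_style, with equal layer weights summing to 1.

    c_feats, a_feats, r_feats map layer names to (height, width, channels)
    feature maps of the content, style and reconstructed images.
    """
    l_content = np.mean([content_layer_loss(c_feats[l], r_feats[l])
                         for l in CONTENT_LAYERS])
    l_style = np.mean([style_layer_loss(r_feats[l], a_feats[l])
                       for l in STYLE_LAYERS])
    return alpha * l_content + beta * l_style


# Toy features: the reconstruction matches the content exactly, but not the style.
layers = STYLE_LAYERS + CONTENT_LAYERS
c = {l: np.ones((1, 1, 1)) for l in layers}
a = {l: np.zeros((1, 1, 1)) for l in layers}
print(total_loss(c, a, r_feats=c))  # 0 + 1e5 * 0.25 = 25000.0
```

Averaging with np.mean is the same as using equal weights 1/n per layer, which keeps the weights summing to 1 as described above.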

My implementation is very similar to the Colab notebook provided in the Medium article mentioned above. I implemented it in an object-oriented fashion and used it to create my style-transferred images.

I tried different style and content layers for the reconstruction, as well as different images. The algorithm is delicate, and the right hyperparameters must be chosen for good-looking outputs, so I copied those from the article.

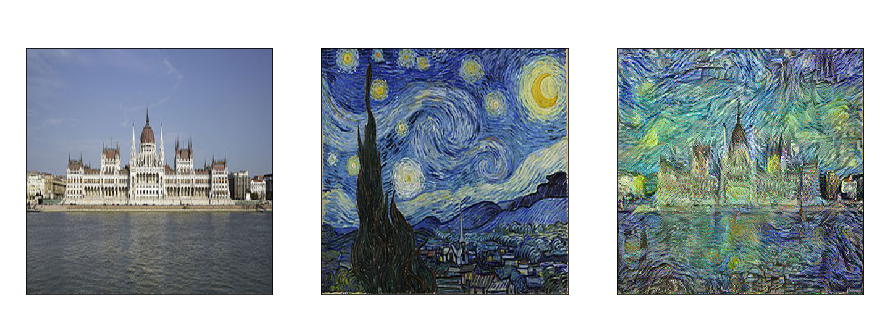

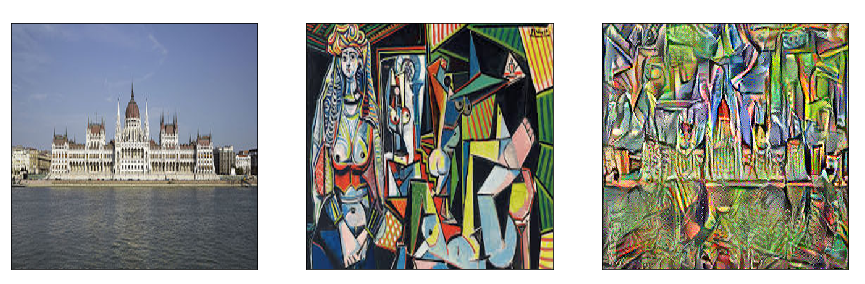

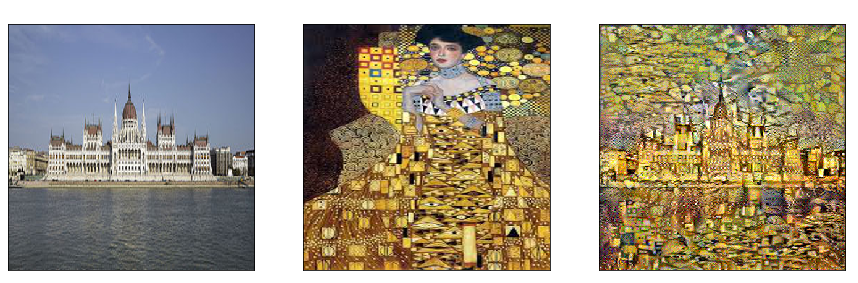

Since I am Hungarian, I used the Hungarian Parliament as the content image and tried to make it resemble several style images, such as Van Gogh's The Starry Night and works by Klimt, Picasso and Escher.

This was the first algorithm I worked with that changes the input instead of tuning the parameters of the model. My implementation can be found here, where I plan to include all future small projects that I do for blog posts.

The code is implemented using TensorFlow and the Keras API built into it. Here are my results:

Picasso style

Escher style

Klimt style

Van Gogh style