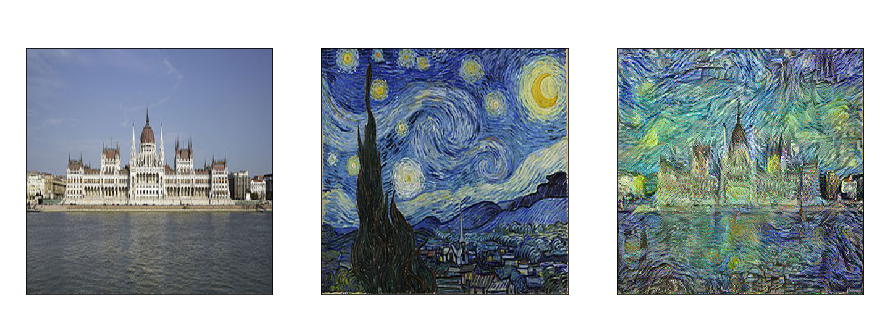

Fast neural style transfer III.

Multi-style transfer based on the fast stylization paper

I wrote about the original neural style transfer and the fast neural style transfer papers in detail before. The point of implementing fast stylization was primarily to use PyTorch, to produce reproducible code and, last but not least, to have fun.

Method

As discussed in an earlier post and in the paper, style transfer is an ill-defined problem, since there is no concrete definition of style and content in image space; these are human concepts. The original approach transforms an image so that it resembles the style image by one metric and the original content image by another. This already produced great results, but each picture had to be optimized separately. The fast neural style transfer paper proposed a new method: use a style transfer network and, instead of optimizing the image itself, optimize the weights of that network so that it produces the styled image from the input (content) image.

The fast-stylization paper proposes a way to include N styles in the same style transfer network via conditional instance normalization. Conditional instance normalization takes the feature maps computed from the content image and a categorical variable c = 1, ..., N, and normalizes according to that style category. This way the normalization inside the style transfer network depends on the style type, and surprisingly this is enough to incorporate many styles into the same transfer net.
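
For reference, the normalization described above can be sketched in one line. The symbols are notation chosen here for illustration: x is one feature map (one channel of one sample), μ and σ are its mean and standard deviation over the spatial dimensions, and γ_c and β_c are the learned scale and shift for style c.

$$
z = \gamma_c \, \frac{x - \mu}{\sigma} + \beta_c
$$

Only γ_c and β_c depend on the style; everything else in the network is shared by all N styles.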

I borrowed code from many places and implemented a lot on my own. Here I present the key parts of the code base, which was developed according to the supplementary section of the original paper:

Code

Conditional instance normalization: since the normalization should differ for each category, it can be implemented with a learnable embedding table (basically a learnable lookup table with one row per style type) that provides the scale and shift applied after normalization:

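    import torch


    class CategoricalConditionalInstanceNorm2d(torch.nn.Module):
        def __init__(self, num_features, n_styles):
            super(CategoricalConditionalInstanceNorm2d, self).__init__()
            self.num_features = num_features
            # Plain instance norm without its own scale/shift parameters
            self.instance_norm = torch.nn.InstanceNorm2d(num_features,
                                                         affine=False)
            # One row per style: first half is gamma, second half is beta
            self.embed = torch.nn.Embedding(n_styles, num_features * 2)
            # Start close to the identity: gamma ~ N(1, 0.02), beta = 0
            self.embed.weight.data[:, :num_features].normal_(1, 0.02)
            self.embed.weight.data[:, num_features:].zero_()

        def forward(self, x, y):
            # x: feature maps (batch, channels, H, W); y: style indices (batch,)
            out = self.instance_norm(x)
            gamma, beta = self.embed(y).chunk(2, 1)
            # Reshape to (batch, channels, 1, 1) so they broadcast over H and W
            out = gamma.view(-1, self.num_features, 1, 1) * out + beta.view(
                -1, self.num_features, 1, 1)
            return out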
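
To make the arithmetic concrete, here is a minimal plain-Python sketch of what the layer computes for a single channel. It is not the PyTorch module itself: the function names and the `style_table` values are made up for illustration, and the lookup dictionary stands in for the embedding table.

```python
import math


def instance_norm(channel, eps=1e-5):
    """Normalize one channel (a flat list of values) to zero mean, unit variance."""
    mean = sum(channel) / len(channel)
    # Biased variance (divide by n), as instance normalization does
    var = sum((v - mean) ** 2 for v in channel) / len(channel)
    return [(v - mean) / math.sqrt(var + eps) for v in channel]


def conditional_instance_norm(channel, style_id, table):
    """Look up (gamma, beta) for the style and apply them after normalization."""
    gamma, beta = table[style_id]
    return [gamma * v + beta for v in instance_norm(channel)]


# Hypothetical per-style parameters for one channel: style_id -> (gamma, beta)
style_table = {0: (1.0, 0.0), 1: (2.0, 0.5)}

pixels = [0.0, 1.0, 2.0, 3.0]
out_style0 = conditional_instance_norm(pixels, 0, style_table)
out_style1 = conditional_instance_norm(pixels, 1, style_table)
```

The same input gives a different output for each style index, but the two outputs differ only by a scale and a shift: here `out_style1` is `2 * out_style0 + 0.5` element by element.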

The other trick is that TensorFlow's SAME convolutional padding does not exist by default in PyTorch, so the top, bottom, left and right paddings had to be calculated manually for each convolutional block, based on the kernel and stride sizes:

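    # 9x9 kernel, stride 1: 8 pixels of padding per axis, split evenly
    self.conv1 = torch.nn.Conv2d(3, 32, (9, 9), stride=1, padding=0)
    self.padding1 = (4, 4, 4, 4)
    ###
    # 3x3 kernel, stride 2: 1 pixel of padding per axis, on the trailing side
    self.conv2 = torch.nn.Conv2d(32, 64, (3, 3), stride=2, padding=0)
    self.padding2 = (0, 1, 0, 1)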
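
As a sketch of how such tuples can be derived rather than worked out by hand, the helper below follows TensorFlow's documented SAME rule: the output size is `ceil(input / stride)`, and any odd pixel of padding goes to the trailing side. The function names and the 256 x 256 input size are assumptions for illustration, and the tuple order (left, right, top, bottom) is the one `torch.nn.functional.pad` expects, which may or may not be how the original code applies it.

```python
import math


def same_padding_1d(size, kernel, stride):
    """Return (before, after) padding for one axis under TensorFlow's SAME rule."""
    out_size = math.ceil(size / stride)
    total = max((out_size - 1) * stride + kernel - size, 0)
    before = total // 2
    return before, total - before  # the extra pixel, if any, goes after


def same_padding_2d(height, width, kernel, stride):
    """Return padding as (left, right, top, bottom)."""
    top, bottom = same_padding_1d(height, kernel, stride)
    left, right = same_padding_1d(width, kernel, stride)
    return left, right, top, bottom


print(same_padding_2d(256, 256, kernel=9, stride=1))  # (4, 4, 4, 4)
print(same_padding_2d(256, 256, kernel=3, stride=2))  # (0, 1, 0, 1)
```

Note that with stride 2 the result depends on the input size: an odd height or width (for example 255) gives (1, 1, 1, 1) instead of (0, 1, 0, 1), so hard-coded tuples like the ones above implicitly assume even input dimensions.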

With this said, all that remains is to try it. And you can do so! The trained model is available as a Docker image hosted on DockerHub. You just have to provide a short video (~15-20 seconds) and have a GPU with at least 4 GB of memory in order to produce results in N = 8 styles from the input movie in a reasonable amount of time (~10 minutes):

    sudo docker pull qbear666/multi_style
    sudo docker tag qbear666/multi_style:latest multi_style  # rename it just for generality of running
    sudo docker run \
        --gpus all \
        -v <path-to-the-movie-file>:/app/movie \
        -v <your-desired-output-directory-for-the-movies>:/app/output/ multi_style

The NVIDIA Container Toolkit (nvidia-container-toolkit) must be installed as well, since `--gpus all` depends on it. The code is available on GitHub.

Demo