Generative adversarial networks

- 8 minsIntroduction

Generative adversarial networks have been invented by Ian Goodfellow and his colleagues in 2014. He wrote about the in his Deep Learning book but currently they are everywhere with CycleGAN and DCGAN, Wasserstein-GAN, etc.

The basic idea is to generate images from a random noise vector that is sampled from some kind of an underlying distribution such as a Gaussian. Moving on one needs to feed this vector through layers of transforms to recreate a random image of the required size. Such image will get the label fake since it is generated by a GENERATOR network. Meanwhile a large collection of unlabeled data can be fed to the DISCRIMINATOR network which has the task to discriminate fake images from real images. Images that we present to the network are in a sense real that they are not generated but collected somehow.

realimage gets label1fakeimage gets label0

The discriminator network is a simple CNN that tries to learn to predict 0 s and 1 s in order to separate generated images from real input.

def _discriminator(self):

model = Sequential()

model.add(

Conv2D(64, (3, 3),

strides=(2, 2),

padding='same',

input_shape=self.input_shape))

model.add(LeakyReLU())

model.add(Dropout(0.3))

model.add(Conv2D(128, (5, 5), strides=(2, 2), padding='same'))

model.add(LeakyReLU())

model.add(Dropout(0.3))

model.add(Flatten())

model.add(Dense(1))

return model

The generator network is simply getting a random vector input for each image an generated images from that via convolutional and dense layers:

def _generator(self):

model = Sequential()

model.add(Dense(12 * 12 * 100, input_shape=(100, )))

model.add(BatchNormalization())

model.add(LeakyReLU())

model.add(Reshape((12, 12, 100)))

model.add(Conv2D(128, (3, 3), strides=(1, 1), padding='valid'))

model.add(BatchNormalization())

model.add(LeakyReLU())

# 10, 10, 128

model.add(UpSampling2D(size=(2, 2), interpolation='bilinear'))

model.add(Conv2D(64, (3, 3), strides=(1, 1), padding='valid'))

model.add(BatchNormalization())

model.add(LeakyReLU())

# 18, 18, 64

model.add(UpSampling2D(size=(2, 2), interpolation='bilinear'))

model.add(Conv2D(32, (5, 5), strides=(1, 1), padding='valid'))

model.add(BatchNormalization())

model.add(LeakyReLU())

# 32, 32, 32

model.add(Conv2D(1, (5, 5), strides=(1, 1), padding='valid'))

# 28, 28, 1

return model

It is used commonly that bilinear or nearest neighbor upscaling and a convolutional layer generally produces less checked-effect images than transpose- or deconvolution, so I am using that in order to acquire smoother generated pictures.

Bilinear upscaling simple increases the size of images by size[0], size[1] in width and in height , while convolution with valid padding reduces the output to (W - width/height, K- kernel size, S - stride):

The tricky part is the definition of the loss function and some other tweaks that are necessary to force the Lipsitz-constraint on the system. Basically only thing you need to do is to clip the gradients of the discriminator network to avoid exploding gradients and therefore approximately forcing the before mentioned constraint. To find out why look into the details of the Wasserstein-GAN.

The generator loss must be the negative cross entropy between the generated images a tensor of the same same with zeros, indicating fakeness.

def _generator_loss(self, generated):

return -tf.losses.sigmoid_cross_entropy(tf.zeros_like(generated),

generated)

The discriminator loss is the same as the generator loss with a negative sign, since we need to minimize that in order to maximize the realness of fake images, combined with the loss of forcing real images to be real with a cross entropy loss:

def _discriminator_loss(self, real, generated):

real_loss = tf.losses.sigmoid_cross_entropy(

multi_class_labels=tf.ones_like(real), logits=real)

generated_loss = tf.losses.sigmoid_cross_entropy(

multi_class_labels=tf.zeros_like(generated), logits=generated)

total_loss = real_loss + generated_loss

return total_loss

You must have noticed by now that we are using sigmoid_cross_entropy with logits instead of applying a sigmoid function to the output layer. This is due to the behaviour of GANs that they only work well without resricting the models with sigmoids at the end.

A custom training step with gradient clipping is applied using the Adam optimizer in Tensorflow:

def _train_step(self, images, batch_size, noise_dim=100):

noise_vec = tfd.Normal(loc=[0.] * noise_dim,

scale=[1.] * noise_dim).sample([batch_size])

with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:

generated = self.generator(noise_vec)

real_out = self.discriminator(images)

gen_out = self.discriminator(generated)

gen_loss = self._generator_loss(gen_out)

disc_loss = self._discriminator_loss(real_out, gen_out)

grads_of_gen = gen_tape.gradient(gen_loss, self.generator.variables)

grads_of_disc = disc_tape.gradient(disc_loss,

self.discriminator.variables)

tf.train.AdamOptimizer(1e-3).apply_gradients(

zip(grads_of_gen, self.generator.variables))

capped_disc_grad = [

tf.clip_by_value(grad, -1., 1.) for grad in grads_of_disc

]

tf.train.AdamOptimizer(1e-3).apply_gradients(

zip(capped_disc_grad, self.discriminator.variables))

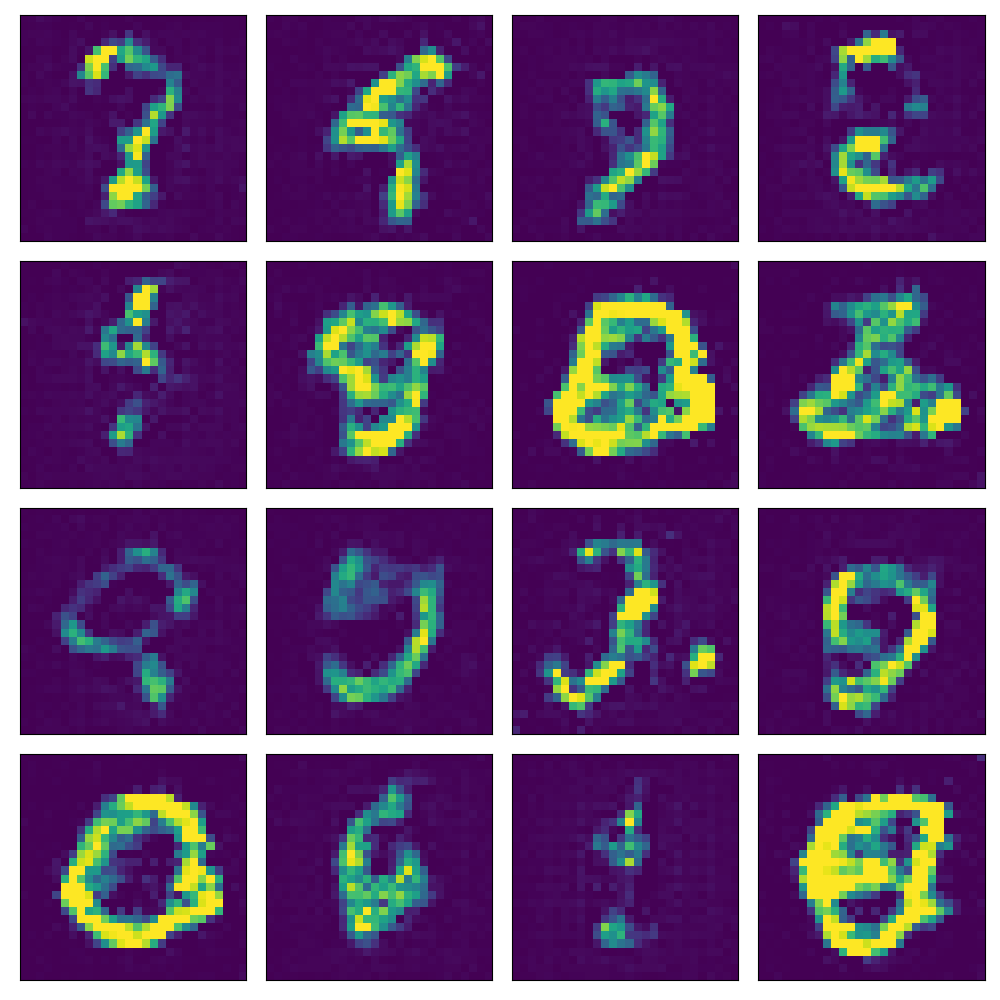

The random noise vector is sampled at each training step but I also created a (16, 100) random noise vector that I sampled at the beginning and made random reconstructions during training to monitor how well the system learns to generate fake images. I used the MNIST dataset since images with higher moments need larger and more advanced architectures but simple GANs work ‘well’ on these images:

After 50 epochs with a batch size of 128 and a learning rate of 1e-3:

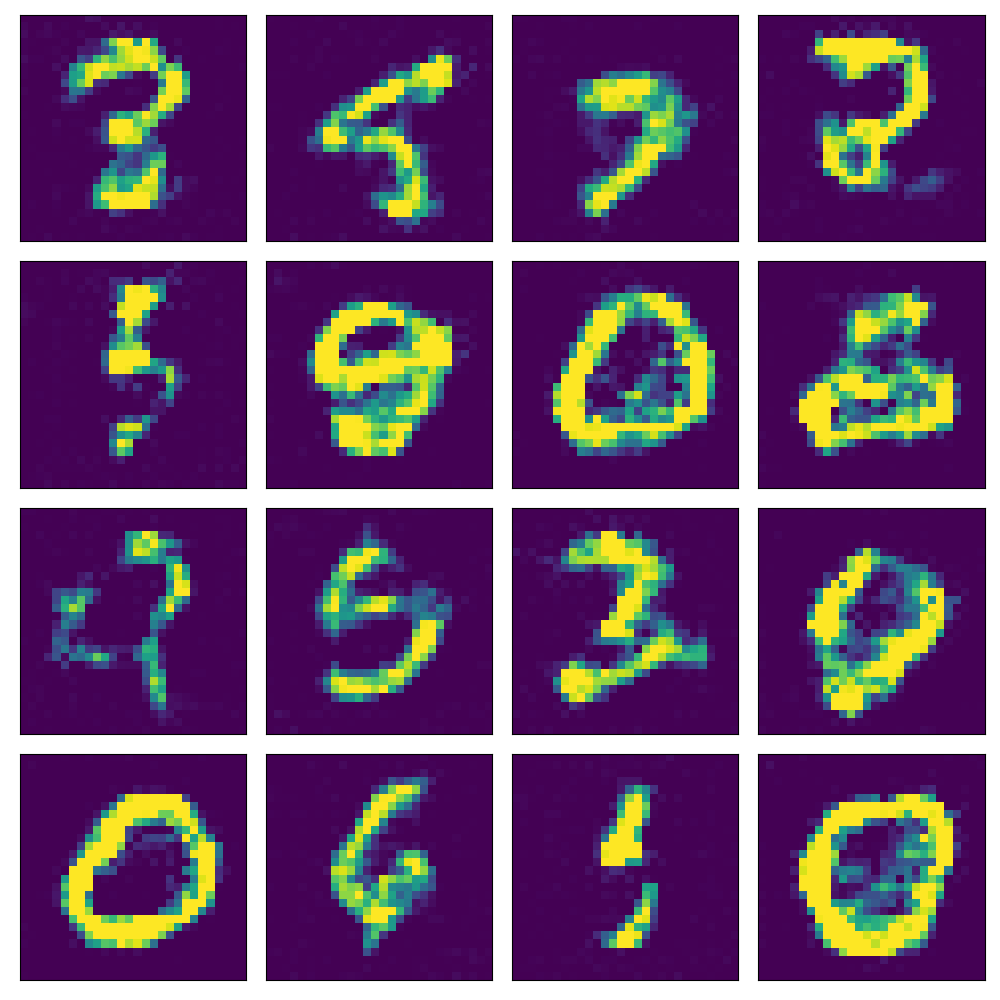

With the same parameters after 100 epochs:

It can be seen that it works.:)

My implementation was heavily influenced by the one on Tensorflow’s page and the suggestions came from my group on some implementations details such as gradient clipping and bilinear upsampling.