Neural networks from scratch rebooted


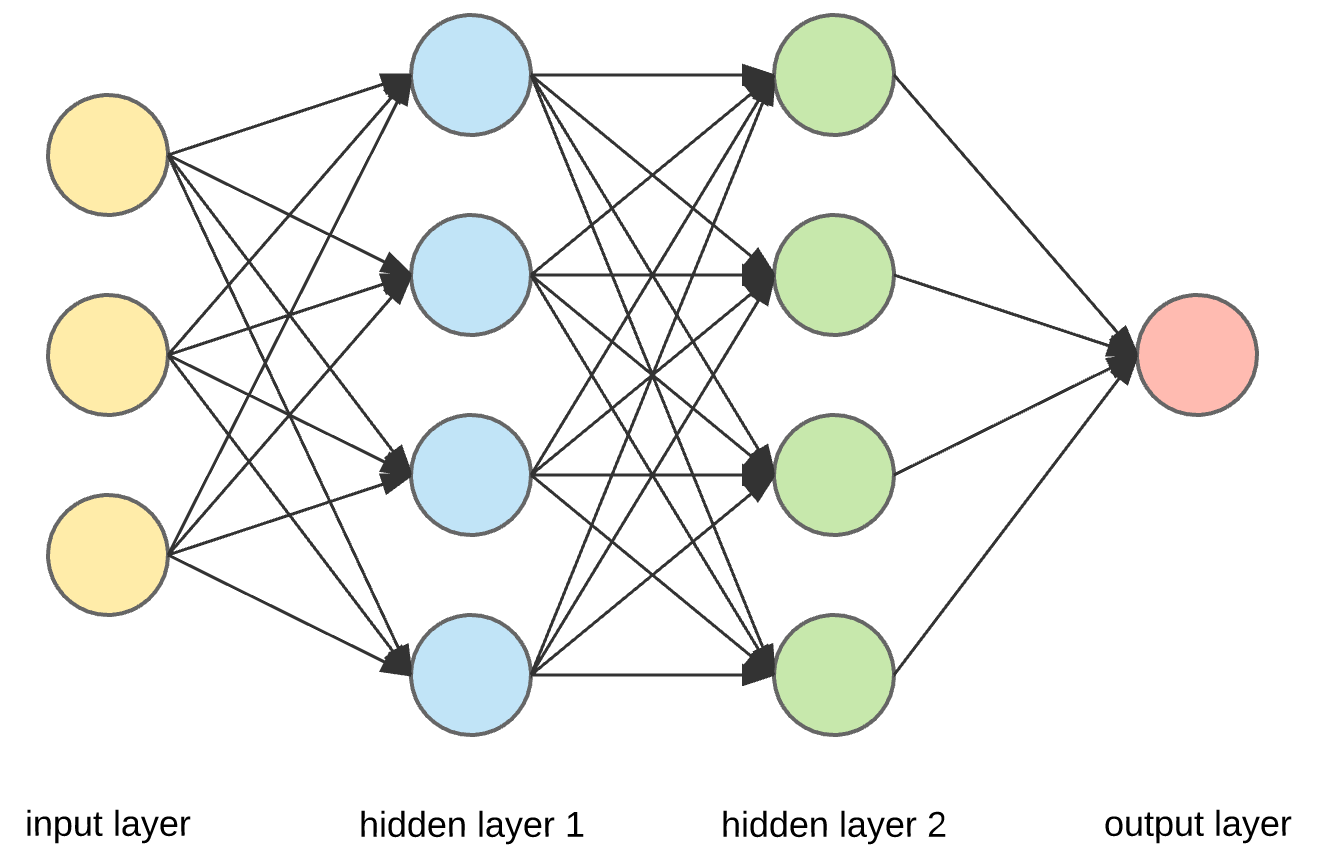

I did this a while ago, but I want to revisit the topic with a full derivation and build a Keras-like API at the same time. The goal is to build up an understanding of the concept and make it crystal clear to the reader. In the first part I'll go through the basic derivations and explain their meaning. After that I'll present the code for abstract activation function and layer classes along with their concrete implementations. Finally, I'll test the activation functions and create a Keras-like model class that holds the layers, prints a summary, and can fit and evaluate a model.

Derivation

As we saw in the previous post on logistic regression, the goal is to obtain the derivatives of the loss with respect to the weights and biases of each layer by applying the chain rule. First, the forward pass: with an activation function f, weights W and biases b, the first layer computes

\[A^{[1]} = W^{[1]}X + b^{[1]}\]
\[Z^{[1]} = f^{[1]}\big(A^{[1]}\big)\]

That is the computation for one layer. The next layers are very similar; in general, with \(Z^{[0]} = X\):

\[A^{[n]} = W^{[n]}Z^{[n-1]} + b^{[n]}\]
\[Z^{[n]} = f^{[n]}\big(A^{[n]}\big)\]

Now take the last layer \(N\). The backward pass starts from the derivative of the loss with respect to the network output:

\[\frac{\partial L}{\partial Z^{[N]}_{ij}}\]

This is the place where a general loss function plugs in: any differentiable loss only has to define this derivative. It is the quantity we pass back into the network, and from it all the other derivatives can be computed in sequence. Sticking to the last layer, the derivative with respect to the pre-activation is:

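\[\frac{\partial L}{\partial A^{[N]}_{pq}} = \sum_{ij}\frac{\partial L}{\partial Z^{[N]}_{ij}} \frac{\partial Z^{[N]}_{ij}}{\partial A^{[N]}_{pq}} = \sum_{ij} \frac{\partial L}{\partial Z^{[N]}_{ij}} f^{' [N]}\big(A_{ij}^{[N]}\big)\delta_{ip}\delta_{jq} = \frac{\partial L}{\partial Z^{[N]}_{pq}} f^{' [N]}\big(A_{pq}^{[N]}\big)\]

For the weights, writing \(A^{[N]}_{pq} = \sum_{k} W^{[N]}_{pk}Z^{[N-1]}_{kq} + b^{[N]}_{p}\) gives \(\partial A^{[N]}_{pq}/\partial W^{[N]}_{st} = \delta_{sp}Z^{[N-1]}_{tq}\), so: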

\[\frac{\partial L}{\partial W^{[N]}_{st}} = \sum_{ijpq}\frac{\partial L}{\partial Z^{[N]}_{ij}} \frac{\partial Z^{[N]}_{ij}}{\partial A^{[N]}_{pq}} \frac{\partial A^{[N]}_{pq}}{\partial W^{[N]}_{st}}= \sum_{ijpq} \frac{\partial L}{\partial Z^{[N]}_{ij}} f^{' [N]}\big(A_{ij}^{[N]}\big)\delta_{ip}\delta_{jq}\delta_{sp}Z^{[N-1]}_{tq} = \sum_{q} \frac{\partial L}{\partial Z^{[N]}_{sq}} f^{' [N]}\big(A_{sq}^{[N]}\big) \big(Z^{[N-1]}\big)^{T}_{qt}\]

The first two factors form an element-wise product, and the result is matrix-multiplied by the transpose of the previous layer's output. The bias is a bit trickier: b is a column vector that is broadcast, i.e. added to every column (sample) of \(W^{[N]}Z^{[N-1]}\). To differentiate it, we treat it as the matrix \((b, b, \ldots, b)\) with entries \(b_{st}\):

\[\frac{\partial L}{\partial b^{[N]}_{st}} = \sum_{ijpq}\frac{\partial L}{\partial Z^{[N]}_{ij}} \frac{\partial Z^{[N]}_{ij}}{\partial A^{[N]}_{pq}} \frac{\partial A^{[N]}_{pq}}{\partial b^{[N]}_{st}}= \sum_{ijpq} \frac{\partial L}{\partial Z^{[N]}_{ij}} f^{' [N]}\big(A_{ij}^{[N]}\big)\delta_{ip}\delta_{jq}\delta_{sp}\delta_{tq} = \frac{\partial L}{\partial Z^{[N]}_{st}} f^{' [N]}\big(A_{st}^{[N]}\big)\]

But the real b has only the single index s: every column t uses the same \(b_s\), so the contributions of all columns add up:

\[\frac{\partial L}{\partial b^{[N]}_{s}} = \sum_{t}\frac{\partial L}{\partial Z^{[N]}_{st}} f^{' [N]}\big(A_{st}^{[N]}\big)\]

In NumPy this is a sum over the batch axis, np.sum(dZ * f'(A), axis=1, keepdims=True). It turns into a mean over that axis if the loss itself is averaged over the batch, in which case dW picks up the same 1/m factor.

```python
import numpy as np
import abc
```
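To convince ourselves that these formulas are right, they can be checked against finite differences. The following is a minimal sketch, not part of the original code: it assumes a single sigmoid layer and a made-up linear loss L = sum(G * Z), so that the incoming derivative is simply the random matrix G, and numerical_grad is a hypothetical helper. It also checks the gradient handed to the previous layer, \(W^{T}\big(\partial L/\partial Z \odot f'(A)\big)\), which the derivation does not spell out but the DenseBlock code computes as dprev_new.

```python
import numpy as np

# Finite-difference check of the gradients derived above for one dense layer
# with a sigmoid activation. The loss is a made-up linear function
# L = sum(G * Z), so dL/dZ is exactly the random matrix G.
rng = np.random.default_rng(0)
n_in, n_out, m = 4, 3, 5  # input size, output size, batch size (columns)

W = rng.normal(size=(n_out, n_in))
b = rng.normal(size=(n_out, 1))
Z_prev = rng.normal(size=(n_in, m))
G = rng.normal(size=(n_out, m))  # plays the role of dL/dZ^[N]


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def loss():
    # Reads W, b and Z_prev at call time, so in-place perturbations are seen
    A = W @ Z_prev + b  # b of shape (n_out, 1) broadcasts over the m columns
    return np.sum(G * sigmoid(A))


def numerical_grad(f, X, eps=1e-6):
    """Central differences, perturbing one entry of X in place at a time."""
    grad = np.zeros_like(X)
    for idx in np.ndindex(X.shape):
        old = X[idx]
        X[idx] = old + eps
        f_plus = f()
        X[idx] = old - eps
        f_minus = f()
        X[idx] = old  # restore the original value
        grad[idx] = (f_plus - f_minus) / (2 * eps)
    return grad


# Analytic gradients from the derivation
A = W @ Z_prev + b
delta = G * sigmoid(A) * (1 - sigmoid(A))  # dL/dA: element-wise product
dW = delta @ Z_prev.T                       # times the transposed previous output
db = np.sum(delta, axis=1, keepdims=True)   # sum over the batch columns
dZ_prev = W.T @ delta                       # gradient handed to the previous layer

num_dW = numerical_grad(loss, W)
num_db = numerical_grad(loss, b)
num_dZ_prev = numerical_grad(loss, Z_prev)

print(np.max(np.abs(dW - num_dW)))
print(np.max(np.abs(db - num_db)))
print(np.max(np.abs(dZ_prev - num_dZ_prev)))
```

All three differences should be tiny, on the order of the finite-difference error rather than of the gradients themselves.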

In code

First I create an abstract ActivationFunction class with a __call__ method and a backprop method. This is the minimal backbone an activation function needs; as in Keras, it could also be turned into an independent layer of its own.

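```python
class ActivationFunction(abc.ABC):
    """Interface every activation needs: a forward pass and its derivative."""

    @abc.abstractmethod
    def __call__(self, x):
        pass

    @abc.abstractmethod
    def backprop(self, x):
        """Return the element-wise derivative f'(x)."""
        pass


class ReLU(ActivationFunction):
    def __call__(self, x):
        return np.maximum(x, 0)

    def backprop(self, x):
        # 1 where x > 0, else 0 (the derivative at exactly 0 is taken as 0)
        return (x > 0).astype(x.dtype)


class Sigmoid(ActivationFunction):
    def __init__(self):
        self.sigmoid = lambda x: 1. / (1. + np.exp(-x))

    def __call__(self, x):
        return self.sigmoid(x)

    def backprop(self, x):
        # sigma'(x) = sigma(x) * (1 - sigma(x)) = sigma(x) * sigma(-x)
        return self.sigmoid(x) * self.sigmoid(-x)


class Linear(ActivationFunction):
    def __call__(self, x):
        return x

    def backprop(self, x):
        # The derivative of the identity is 1 everywhere
        return np.ones_like(x)
```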
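The introduction promises a test of the activation functions. One way to do it, sketched here with a hypothetical check_derivative helper that is not part of the post's classes, is to compare each analytic derivative with a central finite difference; the lambdas below use the same formulas as the classes above. Points exactly at 0 are skipped because ReLU has no derivative there.

```python
import numpy as np


def check_derivative(f, df, x, eps=1e-6):
    """Largest gap between an analytic derivative df and a central difference of f.

    f and df act element-wise, so one vectorised difference quotient
    checks every entry of x at once.
    """
    numeric = (f(x + eps) - f(x - eps)) / (2 * eps)
    return np.max(np.abs(df(x) - numeric))


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


# Sample points on both sides of 0, skipping 0 itself where ReLU has no derivative
x = np.concatenate([np.linspace(-5, -0.1, 50), np.linspace(0.1, 5, 50)])

errors = {
    "relu": check_derivative(lambda v: np.maximum(v, 0), lambda v: (v > 0).astype(float), x),
    "sigmoid": check_derivative(sigmoid, lambda v: sigmoid(v) * sigmoid(-v), x),
    "linear": check_derivative(lambda v: v, np.ones_like, x),
}
print(errors)
```

A backprop that returned x for the linear activation, instead of an array of ones, would fail this check immediately.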

Here the abstract layer holds a dictionary that maps activation function names to activation objects, to make life easier. The dense block then implements the forward and backward passes derived above in NumPy.

```python
class Layer(abc.ABC):
    def __init__(self):
        # Map activation names to activation objects; None means linear
        self.activations = {"relu": ReLU(), "sigmoid": Sigmoid(), "linear": Linear(), None: Linear()}

    @abc.abstractmethod
    def __call__(self, x):
        pass

    @abc.abstractmethod
    def backprop(self, x):
        pass


class DenseBlock(Layer):
    def __init__(self, dim_out, activation=None):
        super().__init__()
        self.dim_out = dim_out
        self.act_fn = self.activations[activation]

    def create_weights(self, dim_in):
        # Called by Model.add once the input size of the layer is known
        self.dim_in = dim_in
        # Glorot (Xavier) normal scaling for W, small random biases
        self.W = np.random.randn(self.dim_out, dim_in) * np.sqrt(2 / (dim_in + self.dim_out))
        self.b = np.random.randn(self.dim_out, 1) * np.sqrt(1 / self.dim_out)

    def __call__(self, x):
        # x has shape (dim_in, batch): one sample per column
        self.z_prev = x
        self.act = np.matmul(self.W, self.z_prev) + self.b  # A = W Z_prev + b
        return self.act_fn(self.act)                         # Z = f(A)

    def backprop(self, dprev):
        # dprev is dL/dZ for this layer's output
        delta = dprev * self.act_fn.backprop(self.act)  # dL/dA, element-wise
        dW = np.matmul(delta, self.z_prev.T)             # dL/dW
        db = np.sum(delta, axis=1, keepdims=True)        # dL/db: sum over the batch, shape (dim_out, 1)
        dprev_new = np.matmul(self.W.T, delta)           # dL/dZ for the previous layer
        return dprev_new, dW, db

    def apply_grads(self, dW, db, lr):
        # Plain gradient descent step
        self.W -= lr * dW
        self.b -= lr * db

    def __str__(self):
        return "DenseBlock : (%s, %s)" % (self.W.shape[1], self.W.shape[0])
```
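To see why the bias gradient needs an explicit axis, it helps to track shapes through one dense block. This sketch uses the sizes of the first ReLU layer in the model summary (30 inputs, 128 outputs, batch of 100) with random stand-in data; it illustrates the shapes only and is not part of the model code.

```python
import numpy as np

# Shape bookkeeping for one dense block, samples stored as columns.
rng = np.random.default_rng(0)
dim_in, dim_out, batch = 30, 128, 100

W = rng.normal(size=(dim_out, dim_in)) * np.sqrt(2 / (dim_in + dim_out))
b = np.zeros((dim_out, 1))
z_prev = rng.normal(size=(dim_in, batch))

act = W @ z_prev + b                # (128, 30) @ (30, 100) + (128, 1) -> (128, 100)
dprev = rng.normal(size=act.shape)  # random stand-in for the upstream dL/dZ
delta = dprev * (act > 0)           # dL/dA with the ReLU derivative

dW = delta @ z_prev.T                       # (128, 100) @ (100, 30) -> (128, 30), like W
db = np.sum(delta, axis=1, keepdims=True)   # (128, 1): one entry per bias
db_no_axis = np.mean(delta, keepdims=True)  # no axis: collapses to a single (1, 1) value
dprev_new = W.T @ delta                     # (30, 128) @ (128, 100) -> (30, 100), like z_prev

print(act.shape, dW.shape, db.shape, db_no_axis.shape, dprev_new.shape)
# (128, 100) (128, 30) (128, 1) (1, 1) (30, 100)
```

A reduction without an axis produces one number for the whole layer, which broadcasting then applies to every bias equally; reducing over axis=1 keeps one gradient per bias.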

The model chains the layers together. It currently supports only binary classification and dense blocks, but it initializes itself fully from the layer definitions and the input size.

```python
class Model:
    def __init__(self, input_size, lr=1e-5):
        self.layers = []
        self.lr = lr
        self.input_size = input_size
        self.current_input_size = input_size

    def add(self, layer):
        # Each new layer takes the previous layer's output size as its input size
        layer.create_weights(self.current_input_size)
        self.layers.append(layer)
        self.current_input_size = layer.dim_out

    def __call__(self, x):
        # Forward pass through all layers in order
        for layer in self.layers:
            x = layer(x)
        return x

    def backprop(self, delta):
        # Backward pass in reverse order; each layer computes the gradient for
        # the layer below with its current W before applying its own update
        for layer in self.layers[::-1]:
            delta, dW, db = layer.backprop(delta)
            layer.apply_grads(dW, db, self.lr)
        return delta

    def summary(self):
        param_count = 0
        print('MODEL:')
        for layer in self.layers:
            n_params = layer.dim_in * layer.dim_out + layer.dim_out
            param_count += n_params
            print(layer, '\tparams : %d' % n_params)
        print('Total trainable parameters : %d' % param_count)

    def fit(self, X, y, batch_size, epochs, validation_set=None):
        # X: (n_samples, n_features), y: (n_samples, 1);
        # validation_set holds already transposed (features, samples) arrays
        if validation_set is not None:
            val_X, val_y = validation_set

        def batch_generator(X, y, batch_size):
            # Shuffle once per epoch, then yield mini-batches with samples as columns
            random_indices = np.random.permutation(X.shape[0])
            arr = X[random_indices]
            lab = y[random_indices]
            for i in range(0, arr.shape[0], batch_size):
                yield arr[i:i+batch_size].T, lab[i:i+batch_size].T

        def binary_loss(y_true, y_pred):
            # Gradient dL/dy_pred of binary cross-entropy; 1e-12 avoids division by zero
            return (y_pred - y_true) / (y_pred * (1 - y_pred) + 1e-12)

        for epoch in range(epochs):
            for batch, batch_labels in batch_generator(X, y, batch_size):
                y_pred = self(batch)
                dprev = binary_loss(batch_labels, y_pred)
                loss = np.mean(-(batch_labels * np.log(y_pred + 1e-12)
                                 + (1 - batch_labels) * np.log(1 - y_pred + 1e-12)))
                self.backprop(dprev)

            if (epoch + 1) % 100 == 0 and validation_set is not None:
                # loss is the value of the last mini-batch of this epoch
                print('EPOCH %d | Loss : %.5f' % (epoch + 1, loss), end='\t|\t')
                # Threshold the sigmoid output at 0.5 to get class labels
                val_pred = (self(val_X) >= 0.5).astype(float)
                accuracy = np.mean(val_pred == val_y)
                print('Validation accuracy : ', accuracy)
```
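The binary_loss helper inside fit returns the derivative of binary cross-entropy with respect to the prediction. Chained through the sigmoid derivative of the last DenseBlock, the two factors \(p(1-p)\) cancel and \(\partial L/\partial A\) reduces to \(p - y\), which is why many implementations skip both steps and also avoid the division that becomes fragile when p saturates at 0 or 1. A quick numerical sketch of that identity on random, made-up data:

```python
import numpy as np

rng = np.random.default_rng(1)
a = rng.normal(size=(1, 8))                        # pre-activations of the output unit
y = rng.integers(0, 2, size=(1, 8)).astype(float)  # random 0/1 labels


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def bce(p, y):
    # Per-sample binary cross-entropy
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))


p = sigmoid(a)
dL_dp = (p - y) / (p * (1 - p))  # same formula as binary_loss in fit
dL_da = dL_dp * p * (1 - p)      # chain rule through the sigmoid derivative

# Central difference of the per-sample loss with respect to the pre-activation
eps = 1e-6
numeric = (bce(sigmoid(a + eps), y) - bce(sigmoid(a - eps), y)) / (2 * eps)

print(np.max(np.abs(dL_da - (p - y))), np.max(np.abs(dL_da - numeric)))
```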

```python
from sklearn.datasets import load_breast_cancer
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
y = y.reshape(y.shape[0], 1)           # column vector of 0/1 labels
X = StandardScaler().fit_transform(X)  # zero mean, unit variance per feature

X.shape, y.shape
```

```
((569, 30), (569, 1))
```

```python
model = Model(input_size=30, lr=1e-4)
model.add(DenseBlock(128, "relu"))
model.add(DenseBlock(64, "relu"))
model.add(DenseBlock(32, "relu"))
model.add(DenseBlock(1, "sigmoid"))
model.summary()

batch_size = 100
epochs = 1000

# Hold out the first 100 samples for validation, transposed to (features, samples)
test_X = X[:100].T
test_y = y[:100].T
```

```
MODEL:
DenseBlock : (30, 128)     params : 3968
DenseBlock : (128, 64)     params : 8256
DenseBlock : (64, 32)      params : 2080
DenseBlock : (32, 1)       params : 33
Total trainable parameters : 14337
```
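The parameter counts can be reproduced by hand: each DenseBlock has dim_in × dim_out weights plus dim_out biases. A tiny sketch of that arithmetic, where layer_sizes is just an illustrative list of the sizes used above:

```python
# Each DenseBlock has dim_in * dim_out weights plus dim_out biases,
# the same per-layer count Model.summary adds up.
layer_sizes = [30, 128, 64, 32, 1]

params = [d_in * d_out + d_out for d_in, d_out in zip(layer_sizes[:-1], layer_sizes[1:])]
print(params, sum(params))  # [3968, 8256, 2080, 33] 14337
```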

```python
model.fit(X[100:], y[100:], batch_size=batch_size, epochs=epochs, validation_set=(test_X, test_y))
```

```
EPOCH 100 | Loss : 0.04224 | Validation accuracy : 0.94
EPOCH 200 | Loss : 0.02394 | Validation accuracy : 0.95
EPOCH 300 | Loss : 0.08384 | Validation accuracy : 0.96
EPOCH 400 | Loss : 0.03821 | Validation accuracy : 0.96
EPOCH 500 | Loss : 0.06698 | Validation accuracy : 0.97
EPOCH 600 | Loss : 0.01258 | Validation accuracy : 0.97
EPOCH 700 | Loss : 0.03756 | Validation accuracy : 0.97
EPOCH 800 | Loss : 0.02036 | Validation accuracy : 0.97
EPOCH 900 | Loss : 0.00545 | Validation accuracy : 0.96
EPOCH 1000 | Loss : 0.01226 | Validation accuracy : 0.96
```

Just to check our understanding, let's build the same network with the Keras API in TensorFlow and see whether it gives the same summary as our model. This also shows how close our implementation is to Keras, although ours is of course much simpler.

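```python
import tensorflow as tf

model = tf.keras.models.Sequential([
    # input_shape excludes the batch axis, so (None, 30) adds an extra unspecified
    # axis; that is why the summary shows (None, None, ...) output shapes.
    # input_shape=(30,) would give (None, 128) and so on, with the same parameter counts.
    tf.keras.layers.Dense(128, activation='relu', input_shape=(None, 30)),
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(32, activation='relu'),
    tf.keras.layers.Dense(1, activation='sigmoid')
])

model.summary()
```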

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                (None, None, 128)         3968
_________________________________________________________________
dense_1 (Dense)              (None, None, 64)          8256
_________________________________________________________________
dense_2 (Dense)              (None, None, 32)          2080
_________________________________________________________________
dense_3 (Dense)              (None, None, 1)           33
=================================================================
Total params: 14,337
Trainable params: 14,337
Non-trainable params: 0
_________________________________________________________________
```